Neural Networks Cheat Sheet


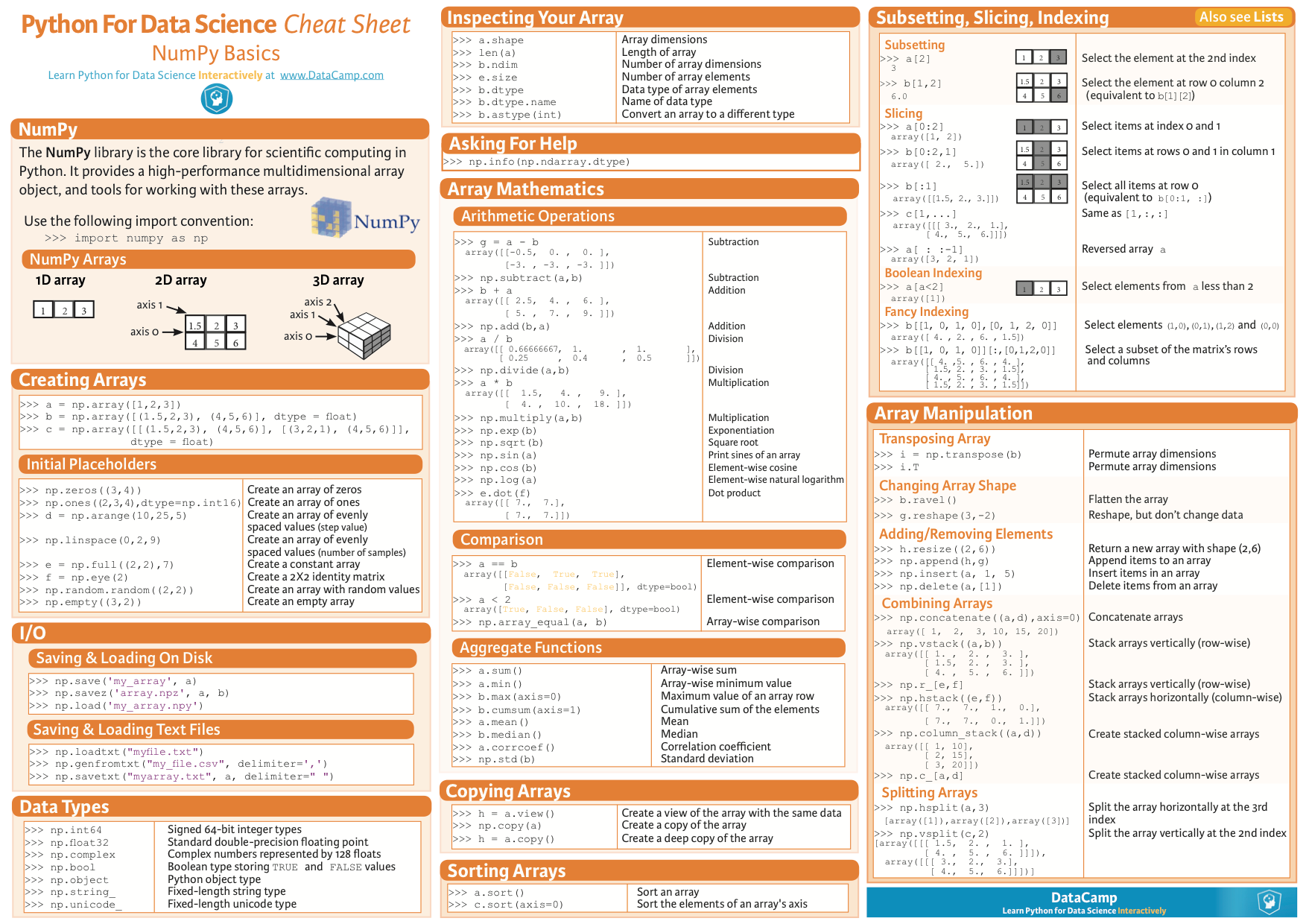

Check out this collection of high-quality deep learning cheat sheets, filled with valuable, concise information on a variety of neural network-related topics. The sheets cover machine learning, deep learning, data mining, neural networks, big data, artificial intelligence, Python, TensorFlow, scikit-learn, and more, gathered from across the web.

Recurrent neural networks, also known as RNNs, are a class of neural networks that allow previous outputs to be used as inputs while having hidden states (from the overview by Afshine Amidi and Shervine Amidi).
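
To make the idea of a hidden state concrete, here is a minimal sketch of a vanilla RNN cell in plain Python. It assumes the common textbook update h_t = tanh(W_xh x_t + W_hh h_{t-1} + b_h); the function and parameter names are hypothetical and are not taken from any of the cheat sheets.

```python
import math


def rnn_step(x, h_prev, w_xh, w_hh, b_h):
    """One step of a vanilla RNN cell (assumed textbook form)."""
    h_new = []
    for j in range(len(h_prev)):
        total = b_h[j]
        # Contribution of the current input.
        total += sum(w_xh[j][i] * x[i] for i in range(len(x)))
        # Contribution of the previous hidden state.
        total += sum(w_hh[j][k] * h_prev[k] for k in range(len(h_prev)))
        h_new.append(math.tanh(total))
    return h_new


def run_rnn(sequence, h0, w_xh, w_hh, b_h):
    """Feed a sequence through the cell, carrying the hidden state forward."""
    h = h0
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b_h)
        states.append(h)
    return states
```

The second state depends on the first one even when the second input is zero, which is the sense in which previous outputs are reused as inputs.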

Algorithms

Deep Learning

Optimizers

| Name | Description |
| --- | --- |
| neupy.algorithms.Momentum | Momentum algorithm. |
| neupy.algorithms.GradientDescent | Mini-batch Gradient Descent algorithm. |
| neupy.algorithms.Adam | Adam algorithm. |
| neupy.algorithms.Adamax | AdaMax algorithm. |
| neupy.algorithms.RMSProp | RMSProp algorithm. |
| neupy.algorithms.Adadelta | Adadelta algorithm. |
| neupy.algorithms.Adagrad | Adagrad algorithm. |
| neupy.algorithms.ConjugateGradient | Conjugate Gradient algorithm. |
| neupy.algorithms.QuasiNewton | Quasi-Newton algorithm. |
| neupy.algorithms.LevenbergMarquardt | Levenberg-Marquardt algorithm, a variation of Newton’s method. |
| neupy.algorithms.Hessian | Hessian gradient descent optimization, also known as Newton’s method. |
| neupy.algorithms.HessianDiagonal | Algorithm that calculates only the diagonal values of the Hessian matrix and uses them instead of the full Hessian matrix. |
| neupy.algorithms.RPROP | Resilient backpropagation (RPROP) is an optimization algorithm for supervised learning. |
| neupy.algorithms.IRPROPPlus | iRPROP+ is an optimization algorithm for supervised learning. |
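
As a rough illustration of what the first two optimizers in the table do, the sketch below minimizes a one-dimensional function with plain gradient descent and with classical momentum. It is a textbook formulation in plain Python, not NeuPy's implementation; the function names and default values are assumptions chosen for the example.

```python
def gradient_descent(grad_fn, x0, learning_rate=0.1, n_steps=500):
    """Plain gradient descent: step against the gradient."""
    x = x0
    for _ in range(n_steps):
        x = x - learning_rate * grad_fn(x)
    return x


def momentum_descent(grad_fn, x0, learning_rate=0.1, momentum=0.9, n_steps=500):
    """Classical momentum: a velocity term accumulates past gradients."""
    x = x0
    velocity = 0.0
    for _ in range(n_steps):
        velocity = momentum * velocity - learning_rate * grad_fn(x)
        x = x + velocity
    return x


def example_gradient(x):
    """Gradient of the example objective f(x) = (x - 3)^2."""
    return 2.0 * (x - 3.0)


print(gradient_descent(example_gradient, x0=0.0))
print(momentum_descent(example_gradient, x0=0.0))
```

Both calls should end up close to the minimum at x = 3. The adaptive methods in the table (Adam, RMSProp, Adagrad and so on) additionally rescale the step for each parameter.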

Regularizers

| Name | Description |
| --- | --- |
| neupy.algorithms.l1 | Applies l1 regularization to the trainable parameters in the network. |
| neupy.algorithms.l2 | Applies l2 regularization to the trainable parameters in the network. |
| neupy.algorithms.maxnorm | Applies max-norm regularization to the trainable parameters in the network. |
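
The penalties behind these regularizers can be sketched in a few lines. The version below assumes each regularizer adds a term to the loss that is scaled by a `decay_rate`, and it reads max-norm as the L-infinity norm (the largest absolute parameter value). Both are assumptions made for illustration; other sources use max-norm to mean a constraint on the norm of each weight vector.

```python
def l1_penalty(weights, decay_rate=0.01):
    """Sum of absolute values; tends to push weights to exactly zero."""
    return decay_rate * sum(abs(w) for w in weights)


def l2_penalty(weights, decay_rate=0.01):
    """Sum of squares; tends to keep all weights small."""
    return decay_rate * sum(w * w for w in weights)


def max_norm_penalty(weights, decay_rate=0.01):
    """Largest absolute value (L-infinity norm), an assumed reading of max-norm."""
    return decay_rate * max(abs(w) for w in weights)


weights = [1.0, -2.0, 3.0]
print(l1_penalty(weights, 0.1), l2_penalty(weights, 0.1), max_norm_penalty(weights, 0.1))
```

During training the chosen penalty would be added to the data loss before computing gradients.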

Learning rate update rules

| Name | Description |
| --- | --- |
| neupy.algorithms.step_decay | Decreases the learning rate monotonically after each iteration. |
| neupy.algorithms.exponential_decay | Applies exponential decay to the learning rate. |
| neupy.algorithms.polynomial_decay | Applies polynomial decay to the learning rate. |
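
The three schedules can be sketched as simple functions of the iteration counter. The formulas below are common forms of these schedules and are assumptions for illustration; the parameter names (`reduction_freq`, `reduction_rate`, `decay_steps`, `minimum_rate`, `power`) are hypothetical and the library's exact formulas may differ.

```python
def step_decay(initial_rate, step, reduction_freq):
    """Hyperbolic decrease: the rate halves after `reduction_freq` steps."""
    return initial_rate / (1 + step / reduction_freq)


def exponential_decay(initial_rate, step, reduction_freq, reduction_rate):
    """Multiply the rate by `reduction_rate` every `reduction_freq` steps."""
    return initial_rate * reduction_rate ** (step / reduction_freq)


def polynomial_decay(initial_rate, step, decay_steps, minimum_rate=0.0, power=1.0):
    """Move from `initial_rate` to `minimum_rate` over `decay_steps` steps."""
    progress = min(step, decay_steps) / decay_steps
    return (initial_rate - minimum_rate) * (1 - progress) ** power + minimum_rate


for step in (0, 50, 100):
    print(
        step,
        step_decay(0.1, step, reduction_freq=100),
        exponential_decay(0.1, step, reduction_freq=100, reduction_rate=0.5),
        polynomial_decay(0.1, step, decay_steps=100),
    )
```

All three start at the initial rate and decrease monotonically; they differ in how quickly the rate falls and whether it reaches a floor.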

Neural Networks with Radial Basis Functions (RBFN)

| Name | Description |
| --- | --- |
| neupy.algorithms.GRNN | Generalized Regression Neural Network (GRNN). |
| neupy.algorithms.PNN | Probabilistic Neural Network (PNN). |
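
Both networks can be understood as kernel methods over the stored training samples. The sketch below assumes a Gaussian radial basis function with a shared width `std`: the GRNN prediction is a kernel-weighted average of the training targets, and the PNN picks the class with the highest average kernel response. The function names are hypothetical and this is not NeuPy's code.

```python
import math


def gaussian_kernel(a, b, std):
    """Radial basis function between two points."""
    squared_distance = sum((ai - bi) ** 2 for ai, bi in zip(a, b))
    return math.exp(-squared_distance / (2 * std ** 2))


def grnn_predict(train_x, train_y, query, std=0.5):
    """Kernel-weighted average of the training targets."""
    weights = [gaussian_kernel(x, query, std) for x in train_x]
    # Note: the sum can underflow to zero if the query is far from all samples.
    return sum(w * y for w, y in zip(weights, train_y)) / sum(weights)


def pnn_predict(train_x, train_labels, query, std=0.5):
    """Class with the highest average kernel response."""
    scores, counts = {}, {}
    for x, label in zip(train_x, train_labels):
        scores[label] = scores.get(label, 0.0) + gaussian_kernel(x, query, std)
        counts[label] = counts.get(label, 0) + 1
    return max(scores, key=lambda label: scores[label] / counts[label])


print(grnn_predict([[0.0], [1.0]], [0.0, 10.0], [0.5]))
print(pnn_predict([[0.0], [0.1], [1.0], [1.1]], ["a", "a", "b", "b"], [0.05]))
```

Neither model has an iterative training phase in this form: fitting amounts to storing the samples, and the only parameter to tune is the kernel width.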

Autoassociative Memory

| Name | Description |
| --- | --- |
| neupy.algorithms.DiscreteBAM | Discrete BAM Network with associations. |
| neupy.algorithms.CMAC | Cerebellar Model Articulation Controller (CMAC) Network based on memory. |
| neupy.algorithms.DiscreteHopfieldNetwork | Discrete Hopfield Network. |
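
A discrete Hopfield network is a compact example of associative memory: it stores patterns in a weight matrix and recovers them from corrupted inputs. The sketch below assumes bipolar patterns (values -1 and +1), Hebbian storage with a zero diagonal, and synchronous updates. These are textbook choices made for illustration and the names are hypothetical.

```python
def train_hopfield(patterns):
    """Hebbian storage: sum of outer products with a zero diagonal."""
    n = len(patterns[0])
    weights = [[0] * n for _ in range(n)]
    for pattern in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    weights[i][j] += pattern[i] * pattern[j]
    return weights


def recall(weights, state, n_iterations=5):
    """Synchronous updates until the state stops changing."""
    state = list(state)
    for _ in range(n_iterations):
        new_state = []
        for row in weights:
            activation = sum(w * s for w, s in zip(row, state))
            new_state.append(1 if activation >= 0 else -1)
        if new_state == state:
            break
        state = new_state
    return state


stored = [1, -1, 1, -1, 1, -1]
weights = train_hopfield([stored])
noisy = [1, -1, 1, -1, 1, 1]  # last element flipped
print(recall(weights, noisy))
```

With a single stored pattern and one flipped element, the recalled state matches the stored pattern.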

Competitive Networks

| Name | Description |
| --- | --- |
| neupy.algorithms.ART1 | Adaptive Resonance Theory (ART1) Network for binary data clustering. |
| neupy.algorithms.GrowingNeuralGas | Growing Neural Gas (GNG) algorithm. |
| neupy.algorithms.SOFM | Self-Organizing Feature Map (SOFM or SOM). |
| neupy.algorithms.LVQ | Learning Vector Quantization (LVQ) algorithm. |
| neupy.algorithms.LVQ2 | Learning Vector Quantization 2 (LVQ2) algorithm. |
| neupy.algorithms.LVQ21 | Learning Vector Quantization 2.1 (LVQ2.1) algorithm. |
| neupy.algorithms.LVQ3 | Learning Vector Quantization 3 (LVQ3) algorithm. |
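
Competitive networks share one core step: find the unit closest to the input and update only the winner (or its neighbourhood). The sketch below shows that step for the basic LVQ rule, assuming squared Euclidean distance and a fixed step size. The names are hypothetical, and the LVQ2, LVQ2.1 and LVQ3 variants add further conditions that are not shown here.

```python
def nearest_prototype(prototypes, sample):
    """Index of the prototype with the smallest squared distance."""
    distances = [
        sum((p - s) ** 2 for p, s in zip(prototype, sample))
        for prototype in prototypes
    ]
    return distances.index(min(distances))


def lvq1_update(prototypes, prototype_labels, sample, label, step=0.1):
    """Pull the winner toward the sample if labels match, push it away otherwise."""
    winner = nearest_prototype(prototypes, sample)
    direction = 1.0 if prototype_labels[winner] == label else -1.0
    prototypes[winner] = [
        p + direction * step * (s - p)
        for p, s in zip(prototypes[winner], sample)
    ]
    return winner


prototypes = [[0.0, 0.0], [1.0, 1.0]]
prototype_labels = [0, 1]
lvq1_update(prototypes, prototype_labels, sample=[0.2, 0.0], label=0)
print(prototypes)
```

Dropping the label check and always pulling the winner toward the sample gives the unsupervised update used by simple competitive learners.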


Associative

| Name | Description |
| --- | --- |
| neupy.algorithms.Oja | Oja is an unsupervised technique used for dimensionality reduction tasks. |
| neupy.algorithms.Kohonen | Kohonen Neural Network used for unsupervised learning. |
| neupy.algorithms.Instar | Instar is a simple unsupervised Neural Network algorithm which detects associations. |
| neupy.algorithms.HebbRule | Neural Network with Hebbian Learning. |
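
Oja's rule is a Hebbian update with a built-in normalization term, which is why it can be used for dimensionality reduction: a single unit's weight vector drifts toward the first principal direction of the data. The sketch below assumes the single-unit form w ← w + η·y·(x − y·w) with y = w·x; the function name, step size and toy data are illustrative assumptions.

```python
import math


def oja_train(samples, weights, step=0.1, n_epochs=100):
    """Single-unit Oja rule: Hebbian growth plus a decay that limits the norm."""
    weights = list(weights)
    for _ in range(n_epochs):
        for x in samples:
            y = sum(w * xi for w, xi in zip(weights, x))
            weights = [w + step * y * (xi - y * w) for w, xi in zip(weights, x)]
    return weights


# Toy data that varies only along the diagonal direction (1, 1).
samples = [[1.0, 1.0], [-0.5, -0.5], [0.8, 0.8], [-1.0, -1.0]]
weights = oja_train(samples, weights=[1.0, 0.0])
print(weights, math.sqrt(sum(w * w for w in weights)))
```

On this toy data the weight vector should line up with the diagonal and have a norm close to one. A plain Hebbian rule without the decay term would keep growing instead.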

Boltzmann Machine

| Name | Description |
| --- | --- |
| neupy.algorithms.RBM | Boolean/Bernoulli Restricted Boltzmann Machine (RBM). |

Layers

Layers with activation function

| Name | Description |
| --- | --- |
| neupy.layers.Linear | Layer with linear activation function. |
| neupy.layers.Sigmoid | Layer with the sigmoid used as an activation function. |
| neupy.layers.HardSigmoid | Layer with the hard sigmoid used as an activation function. |
| neupy.layers.Tanh | Layer with the hyperbolic tangent used as an activation function. |
| neupy.layers.Relu | Layer with the rectifier (ReLU) used as an activation function. |
| neupy.layers.LeakyRelu | Layer with the leaky rectifier (Leaky ReLU) used as an activation function. |
| neupy.layers.Elu | Layer with the exponential linear unit (ELU) used as an activation function. |
| neupy.layers.PRelu | Layer with the parametrized ReLU used as an activation function. |
| neupy.layers.Softplus | Layer with the softplus used as an activation function. |
| neupy.layers.Softmax | Layer with the softmax activation function. |
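
For reference, the activation functions named in the table can be written as scalar functions in plain Python. These are the usual textbook definitions. The hard sigmoid slope of 0.2 and the default `alpha` values are assumptions, since conventions vary between libraries.

```python
import math


def sigmoid(x):
    # Two branches avoid overflow for large negative inputs.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def hard_sigmoid(x):
    # Piecewise-linear approximation (assumed slope 0.2, offset 0.5).
    return min(1.0, max(0.0, 0.2 * x + 0.5))


def relu(x):
    return max(0.0, x)


def leaky_relu(x, alpha=0.01):
    return x if x > 0 else alpha * x


def elu(x, alpha=1.0):
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def softplus(x):
    # Numerically stable form of log(1 + exp(x)).
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def softmax(values):
    # Subtracting the maximum keeps the exponentials from overflowing.
    shift = max(values)
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


print(softmax([1.0, 2.0, 3.0]))
```

A parametrized ReLU has the same form as `leaky_relu`, except that `alpha` is learned during training rather than fixed.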

Convolutional layers

| Name | Description |
| --- | --- |
| neupy.layers.Convolution | Convolutional layer. |
| neupy.layers.Deconvolution | Deconvolution layer (also known as transposed convolution). |

Recurrent layers

| Name | Description |
| --- | --- |
| neupy.layers.LSTM | Long Short Term Memory (LSTM) Layer. |
| neupy.layers.GRU | Gated Recurrent Unit (GRU) Layer. |

Pooling layers

| Name | Description |
| --- | --- |
| neupy.layers.MaxPooling | Maximum pooling layer. |
| neupy.layers.AveragePooling | Average pooling layer. |
| neupy.layers.Upscale | Upscales input over two axes (height and width). |
| neupy.layers.GlobalPooling | Global pooling layer. |
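
The pooling operations are easy to see on a small 2D grid. The sketch below assumes non-overlapping square windows, a single channel, and upscaling by simple repetition; the function names are hypothetical and real layers also handle batches, channels, strides and padding.

```python
def pool2d(image, size, reduce_fn):
    """Apply `reduce_fn` to each non-overlapping size x size window."""
    pooled = []
    for r in range(0, len(image) - size + 1, size):
        pooled_row = []
        for c in range(0, len(image[0]) - size + 1, size):
            window = [image[r + dr][c + dc] for dr in range(size) for dc in range(size)]
            pooled_row.append(reduce_fn(window))
        pooled.append(pooled_row)
    return pooled


def mean(values):
    return sum(values) / len(values)


def global_pool(image, reduce_fn):
    """Reduce the whole grid to a single value."""
    return reduce_fn([value for row in image for value in row])


def upscale(image, height_scale, width_scale):
    """Repeat every value along the height and width axes."""
    result = []
    for row in image:
        wide_row = [value for value in row for _ in range(width_scale)]
        result.extend(list(wide_row) for _ in range(height_scale))
    return result


image = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
print(pool2d(image, 2, max))
print(pool2d(image, 2, mean))
print(global_pool(image, mean))
print(upscale([[1, 2]], 2, 2))
```

Max and average pooling shrink the grid by the window size, global pooling collapses it entirely, and upscaling is the reverse direction.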

Normalization layers

| Name | Description |
| --- | --- |
| neupy.layers.BatchNorm | Batch normalization layer. |
| neupy.layers.GroupNorm | Group Normalization layer. |
| neupy.layers.LocalResponseNorm | Local Response Normalization Layer. |
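
A minimal sketch of batch normalization in training mode, assuming a batch given as a list of feature vectors and scalar `gamma` and `beta` values for simplicity (real layers learn one pair per feature and keep running statistics for inference). The names are hypothetical.

```python
import math


def batch_norm(batch, gamma=1.0, beta=0.0, epsilon=1e-5):
    """Normalize each feature over the batch, then scale and shift."""
    n_samples, n_features = len(batch), len(batch[0])
    output = [[0.0] * n_features for _ in range(n_samples)]
    for j in range(n_features):
        column = [row[j] for row in batch]
        mean = sum(column) / n_samples
        variance = sum((value - mean) ** 2 for value in column) / n_samples
        for i in range(n_samples):
            normalized = (column[i] - mean) / math.sqrt(variance + epsilon)
            output[i][j] = gamma * normalized + beta
    return output


print(batch_norm([[1.0, 10.0], [3.0, 30.0]]))
```

Both features end up on the same scale even though their raw ranges differ by a factor of ten. Group normalization applies the same idea to groups of channels within one sample instead of across the batch.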

Stochastic layers

| Name | Description |
| --- | --- |
| neupy.layers.Dropout | Dropout layer. |
| neupy.layers.GaussianNoise | Adds Gaussian noise to the input value. |
| neupy.layers.DropBlock | DropBlock, a form of structured dropout, where units in a contiguous region of a feature map are dropped together. |
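
Stochastic layers behave differently during training and inference. The sketch below assumes the common "inverted dropout" convention, where kept values are rescaled during training so that nothing needs to change at inference time. The function names and the explicit `rng` argument are choices made for this example.

```python
import random


def dropout(values, drop_rate, rng, training=True):
    """Zero each value with probability `drop_rate`; rescale the rest."""
    if not training or drop_rate == 0:
        return list(values)
    keep_rate = 1.0 - drop_rate
    return [v / keep_rate if rng.random() < keep_rate else 0.0 for v in values]


def gaussian_noise(values, std, rng, training=True):
    """Add zero-mean Gaussian noise during training only."""
    if not training:
        return list(values)
    return [v + rng.gauss(0.0, std) for v in values]


rng = random.Random(0)
print(dropout([1.0, 1.0, 1.0, 1.0], drop_rate=0.5, rng=rng))
print(dropout([1.0, 1.0, 1.0, 1.0], drop_rate=0.5, rng=rng, training=False))
```

DropBlock follows the same principle but drops contiguous regions of a feature map rather than independent units.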

Merge layers

| Name | Description |
| --- | --- |
| neupy.layers.Elementwise | Merges multiple inputs with an elementwise function and generates a single output. |
| neupy.layers.Concatenate | Concatenates multiple inputs into one. |
| neupy.layers.GatedAverage | Applies weighted elementwise addition to multiple outputs. |

Other layers

| Name | Description |
| --- | --- |
| neupy.layers.Input | Layer defines network’s input. |
| neupy.layers.Identity | Passes input through the layer without changes. |
| neupy.layers.Reshape | Layer reshapes input tensor. |
| neupy.layers.Transpose | Layer transposes input tensor. |
| neupy.layers.Embedding | Embedding layer accepts indices as an input and returns rows from the weight matrix associated with these indices. |

Operations

Additional operations that can be performed on the layers or graphs

| Name | Description |
| --- | --- |
| neupy.layers.join(*networks) | Sequentially combines layers and networks into a single network. |
| neupy.layers.parallel(*networks) | Merges all networks/layers into a single network without joining input and output layers together. |
| neupy.layers.repeat(network_or_layer, n) | Copies the input n - 1 times and connects everything in sequential order. |
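
One way to build intuition for these three operations is an analogy with plain function composition. The sketch below is only that analogy: `join_fns`, `parallel_fns` and `repeat_fn` are hypothetical helpers that work on ordinary Python callables, not NeuPy layers. It also assumes that every parallel branch receives the same input, and it reuses one function where a layer-based repeat would create independent copies.

```python
def join_fns(*functions):
    """Sequential composition: the output of one step feeds the next."""
    def network(value):
        for function in functions:
            value = function(value)
        return value
    return network


def parallel_fns(*functions):
    """Independent branches: one output per branch, no chaining between them."""
    def network(value):
        return [function(value) for function in functions]
    return network


def repeat_fn(function, n):
    """The same step applied n times in sequence."""
    return join_fns(*[function] * n)


def double(value):
    return value * 2


def increment(value):
    return value + 1


print(join_fns(double, increment)(3))
print(parallel_fns(double, increment)(3))
print(repeat_fn(double, 3)(1))
```

The key difference is in the shape of the result: sequential composition returns one value, while parallel branches return one value per branch, which a merge layer would then combine.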

Architectures


| Name | Description |
| --- | --- |
| neupy.architectures.vgg16 | VGG16 network architecture with random parameters. |
| neupy.architectures.vgg19 | VGG19 network architecture with random parameters. |
| neupy.architectures.squeezenet | SqueezeNet network architecture with random parameters. |
| neupy.architectures.resnet50 | ResNet50 network architecture with random parameters. |
| neupy.architectures.mixture_of_experts | Generates a mixture of experts architecture from a set of networks that have the same input and output shapes. |

Parameter initialization

| Name | Description |
| --- | --- |
| neupy.init.Constant | Initialize parameter that has constant values. |
| neupy.init.Normal | Initialize parameter sampling from the normal distribution. |
| neupy.init.Uniform | Initialize parameter sampling from the uniform distribution. |
| neupy.init.Orthogonal | Initialize matrix with orthogonal basis. |
| neupy.init.HeNormal | Kaiming He parameter initialization method based on the normal distribution. |
| neupy.init.HeUniform | Kaiming He parameter initialization method based on the uniform distribution. |
| neupy.init.XavierNormal | Xavier Glorot parameter initialization method based on the normal distribution. |
| neupy.init.XavierUniform | Xavier Glorot parameter initialization method based on the uniform distribution. |
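
The He and Xavier methods differ only in how they scale the random values to the layer size. The sketch below uses the commonly cited formulas: Xavier scales by fan-in plus fan-out, He scales by fan-in only. It is written in plain Python with hypothetical function names, so the library's own classes may apply extra options such as a gain factor.

```python
import math
import random


def _sample_matrix(fan_in, fan_out, sampler):
    """Build a fan_in x fan_out matrix from a zero-argument sampler."""
    return [[sampler() for _ in range(fan_out)] for _ in range(fan_in)]


def xavier_uniform(fan_in, fan_out, rng):
    limit = math.sqrt(6.0 / (fan_in + fan_out))
    return _sample_matrix(fan_in, fan_out, lambda: rng.uniform(-limit, limit))


def xavier_normal(fan_in, fan_out, rng):
    std = math.sqrt(2.0 / (fan_in + fan_out))
    return _sample_matrix(fan_in, fan_out, lambda: rng.gauss(0.0, std))


def he_uniform(fan_in, fan_out, rng):
    limit = math.sqrt(6.0 / fan_in)
    return _sample_matrix(fan_in, fan_out, lambda: rng.uniform(-limit, limit))


def he_normal(fan_in, fan_out, rng):
    std = math.sqrt(2.0 / fan_in)
    return _sample_matrix(fan_in, fan_out, lambda: rng.gauss(0.0, std))


rng = random.Random(42)
weights = xavier_uniform(4, 6, rng)
print(len(weights), len(weights[0]))
```

Xavier initialization is usually paired with sigmoid or tanh layers, and He initialization with ReLU-style layers.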

Datasets

| Name | Description |
| --- | --- |
| neupy.datasets.load_digits | Returns dataset that contains discrete digits. |
| neupy.datasets.make_digits | Returns discrete digits dataset. |
| neupy.datasets.make_reber | Generates a list of words that are valid under the Reber grammar. |
| neupy.datasets.make_reber_classification | Generates a random dataset for Reber grammar classification. |

Artificial intelligence is a buzzword across society, companies, and the public, and it is predicted to be THE BUZZWORD of the upcoming year. But what exactly is artificial intelligence? Ramya has put together a “Cheat Sheet” for you to check, and perhaps update, your knowledge of A.I. and neural nets.

It is important to understand that neural nets suffer from black box issues: you cannot directly infer how a neural net obtains its results. Whether the project is a semantic one based on language and words or an illustrative one based on pictures, a neural net will not tell you how the result was generated. Or are there workarounds? See for yourself.

Neural Network Cheat Sheet

About the Author: Ramya Raghuraman

Ramya is currently pursuing her Master’s degree in Data Analytics, specializing in Machine Learning and Deep Learning, at the University of Hildesheim in Hildesheim (Germany), and holds a Bachelor’s degree in Electronics & Communication from Kumaraguru College of Technology (India). She also has a year’s experience working as a PL/SQL developer at Accenture Solutions (Bangalore).

“I was always amazed to see how technology gradually evolved; filling the gaps in all dimensions of human’s life. Even in my prime, playing with numbers always made me inquisitive! This small spark, has now transformed into the desire towards understanding the behavioral traits in analytics. My passion to explore the realms of Data Science and enthusiasm to unbox the concepts of Machine Learning, pushed me to start a career in these fields. Using today’s plethora of data and cutting edge technologies, helping organizations make optimized decisions has never been easier.”

Downloads:

You can download the entire Neural Networks Cheat Sheet here: Download Cheat Sheet


Sources: Photo by Campaign Creators on Unsplash